Like many, I received this email from GitHub a couple of weeks ago about an old repository:

This made me think about how important security scanning is in this day and age. Your code might have been top notch a couple of years ago and still be dangerous today.

This is code from a couple of years ago – do you think your code from two years ago is still as good as it was back then? 😊

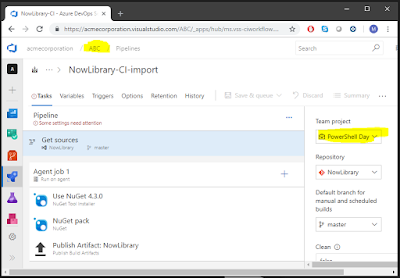

So, to have a bit of a laugh, I hooked up WhiteSource Bolt to a build of that code to see what it actually reports for the open source libraries used there.

WhiteSource Bolt is also free for Azure DevOps, so there is really little stopping you from scanning your code 😊

This is the (kind of expected) result:
