Some months ago I wrote about the TFS Audit Log, because a big part of my daily job is about governance, regulation and access management.

This log is very, very, very verbose, as it is a flat list of every single user and group in the Team Foundation Server’s ACLs. How can you get some more meaningful information from it?

Excel’s basic Sort functions, together with Text to Columns, are a must in order to format it in the best possible way. You can then create a PivotTable and mix and match your data and the criteria you want to show:

This fairly basic PivotTable is going to give you this result:

It is basic, but it is something you can do in no time. Add a chart to the mix and you have a nice report for understanding the ratio between users and groups, without the need for complex SSRS reports or even PowerPivot. It is also reusable: the data source is the whole original Audit Log, so if you need to do it monthly you just replace the appropriate sheet and you’re done.
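If you would rather script the same users-versus-groups count than build the PivotTable by hand, a minimal sketch could look like the one below. It assumes the Audit Log has already been split into columns and saved as CSV, and the column name `IdentityType` (with values such as `User` and `Group`) is purely hypothetical, as is the sample data. Adjust both to whatever your export actually contains.

```python
import csv
import io
from collections import Counter


def count_by_type(rows, type_column="IdentityType"):
    """Count audit-log rows per identity type (for example users vs. groups)."""
    # type_column is a hypothetical column name: rename it to match your export.
    return Counter(row[type_column].strip() for row in rows)


# Made-up sample standing in for the Audit Log after Text to Columns.
sample = io.StringIO(
    "Name,IdentityType\n"
    "Alice,User\n"
    "Bob,User\n"
    "Contributors,Group\n"
)

counts = count_by_type(csv.DictReader(sample))
for identity_type, total in counts.items():
    print(identity_type, total)
```

This is the same grouping the PivotTable performs: one bucket per identity type, with a row count in each.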

Then, for instance, if I want a text document with all the users contained in each TFS group, the only command I need is tfssecurity /imx. As I have hundreds of groups, running it by hand would take quite…long. So I create a text file with all the groups (copied and pasted from the original audit log, one per line) and then launch the following command from the Visual Studio Command Prompt:

for /f "tokens=* delims=," %l in (<path to groups.txt>) do tfssecurity /imx "%l" /server:http://<tfs>:8080 >> <path to mygroupaudit.txt>

This simple command executes tfssecurity /imx for each group and, thanks to the >> operator, appends tfssecurity’s output to the mygroupaudit.txt file. I usually launch it on an unattended machine and keep it running in the background until it finishes.
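If you prefer a script to the one-liner, the same loop can be sketched in Python. This is only an illustration under a few assumptions: tfssecurity is on the PATH (as it is in the Visual Studio Command Prompt), the function names `read_groups` and `audit_groups` are mine, and the `run` parameter exists only so the loop can be tried without a real server.

```python
import subprocess


def read_groups(path):
    """Return the group names stored one per line in a text file."""
    with open(path, encoding="utf-8") as handle:
        return handle.read().splitlines()


def audit_groups(groups, server, out_path, run=subprocess.run):
    """Run tfssecurity /imx for each group and append its output to out_path."""
    # Mode "a" plays the role of the >> redirection: nothing is overwritten.
    with open(out_path, "a", encoding="utf-8") as out:
        for group in groups:
            group = group.strip()
            if not group:
                continue  # skip blank lines in the groups file
            result = run(
                ["tfssecurity", "/imx", group, f"/server:{server}"],
                capture_output=True,
                text=True,
            )
            out.write(result.stdout)


# Example call, with the same placeholders as the command above:
# audit_groups(read_groups(r"<path to groups.txt>"),
#              "http://<tfs>:8080",
#              r"<path to mygroupaudit.txt>")
```

Passing the arguments as a list avoids quoting problems with group names that contain spaces.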

Then, to get a basic but polished report, open the resulting file in Word and set the font to Bold for these four lines wherever they appear, except for the very first ones at the top, which are IMHO useful for a nice presentation:

Microsoft (R) TFSSecurity - Team Foundation Server Security Tool

Copyright (c) Microsoft Corporation. All rights reserved.

The target Team Foundation Server is http://<tfs>:8080/.

Done.

Word has a very useful feature for this: select text with similar formatting. Use it and wipe those lines away! You’ll get something neat and polished. Change the fonts, add whatever else you need, and you’ll have an Audit Report in five minutes :)
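The same clean-up can also be scripted instead of done in Word. The sketch below is just one possible approach: it keeps the first occurrence of each of the four tfssecurity banner lines and drops every repeat. The function names are mine, and the `<members of ...>` lines in the sample are placeholders, not real tfssecurity output.

```python
BANNER_PREFIXES = (
    "Microsoft (R) TFSSecurity",
    "Copyright (c) Microsoft Corporation",
    "The target Team Foundation Server is",
)


def banner_key(line):
    """Return an identifier if the line is one of the repeated banner lines."""
    text = line.strip()
    if text == "Done.":
        return "Done."
    for prefix in BANNER_PREFIXES:
        if text.startswith(prefix):
            return prefix
    return None


def strip_repeated_banners(lines):
    """Keep the first occurrence of each banner line and drop the repeats."""
    seen = set()
    kept = []
    for line in lines:
        key = banner_key(line)
        if key is None:
            kept.append(line)  # ordinary content is always kept
        elif key not in seen:
            seen.add(key)
            kept.append(line)  # first occurrence stays for presentation
    return kept


# Placeholder sample: two tfssecurity runs appended to the same file.
sample = [
    "Microsoft (R) TFSSecurity - Team Foundation Server Security Tool",
    "Copyright (c) Microsoft Corporation. All rights reserved.",
    "The target Team Foundation Server is http://<tfs>:8080/.",
    "<members of group A>",
    "Done.",
    "Microsoft (R) TFSSecurity - Team Foundation Server Security Tool",
    "Copyright (c) Microsoft Corporation. All rights reserved.",
    "The target Team Foundation Server is http://<tfs>:8080/.",
    "<members of group B>",
    "Done.",
]

cleaned = strip_repeated_banners(sample)
print("\n".join(cleaned))
```

To clean the real file, read it with `open(...).read().splitlines()`, pass the lines through `strip_repeated_banners`, and write the result back out.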

Of course it’s possible to use that file in a better way, but these basic tips will give you something in next to no time!

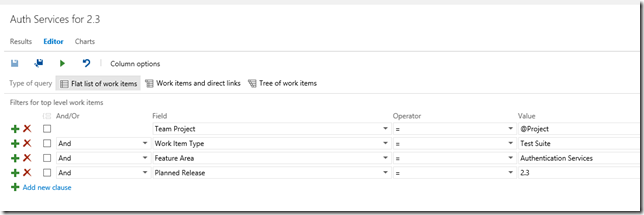

Going slightly deeper into the reporting technologies, PowerPivot is perfect for that. You just need to load the .csv file and you have a dynamic model that is perfect for drill-down queries. With this:

you’re going to get this chart:

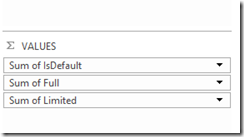

which is pretty basic. But what if you want to know how many users with Full access (which requires a CAL, as we know) accessed the server on a specific date? Well, you can create a chart with Last Accessed UTC on the Axis as well as on a Slicer, so you can filter your timeframe, and Sum of Full on the Values:

But as it is PowerPivot, you can drill down as you wish. So if from my date selection I pick just today at 13:09 (the only access for today on this test instance, by me) and I drill down by Display Name, what I get is …

…a big pie with just me as a value:
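Outside PowerPivot, the two charts above boil down to a grouped sum and a filter. Here is a rough sketch of both, using the column names mentioned above (Display Name, Last Accessed UTC, Full). The value formats are assumptions: I treat Full as 0/1 and the timestamp as starting with the date followed by a space. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict


def full_access_per_day(rows):
    """Sum the Full column per calendar day of Last Accessed UTC."""
    totals = defaultdict(int)
    for row in rows:
        # Assumption: the timestamp looks like "<date> <time>".
        day = row["Last Accessed UTC"].split(" ")[0]
        totals[day] += int(row["Full"] or 0)
    return dict(totals)


def full_access_users(rows, day):
    """Drill down: display names of Full users who accessed on a given day."""
    return sorted(
        row["Display Name"]
        for row in rows
        if row["Last Accessed UTC"].startswith(day) and int(row["Full"] or 0) > 0
    )


# Made-up sample standing in for the .csv file loaded into PowerPivot.
sample = io.StringIO(
    "Display Name,Last Accessed UTC,Full\n"
    "Alice,2013-05-02 13:09:00,1\n"
    "Bob,2013-05-02 09:30:00,0\n"
    "Carol,2013-05-01 17:45:00,1\n"
)
rows = list(csv.DictReader(sample))

per_day = full_access_per_day(rows)
print(per_day)
print(full_access_users(rows, "2013-05-02"))
```

The first function mirrors Sum of Full on the Values with the date on the Axis. The second mirrors slicing on a date and drilling down by Display Name.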

These are just easy samples, but as soon as you realise that this csv data is a data source…the whole reporting world will welcome you!