Despite the push we’ve seen in the last few years, the Hosted Build Service might not be the right product for you for whatever reason.

If your agents aren’t running in the same domain as Team Foundation Server and you want to use the Test Agent, you really risk opening Pandora’s box, courtesy of WinRM and PowerShell remoting.

To be completely clear: I have nothing against them. The only downside is that they need to be approached in the right way, otherwise the can-of-worms effect is just around the corner.

First and foremost, remember that whenever you target a machine for Test Agent deployment you only need to consider the Build Agent-Test Agent relationship. All the errors you get are going to come from the Test box, not the Build box.

So when you need to configure WinRM, the Test box is the machine that is going to be accepting the connections. While it sounds straightforward, sometimes things happen and one is tempted to look at the Build box first: don’t.
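Before digging into WinRM configuration, it can help to confirm that the Test box is reachable at all from the Build box. The following is a minimal sketch, not part of any TFS tooling: it only attempts a TCP connection to the default WinRM listener ports (5985 for HTTP, 5986 for HTTPS). The function names are made up for illustration, and it assumes the listeners have not been moved to custom ports.

```python
import socket

# Default WinRM listener ports: 5985 for HTTP, 5986 for HTTPS.
# If the Test box uses custom ports, adjust this mapping (assumption).
WINRM_PORTS = {"http": 5985, "https": 5986}


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def check_winrm_ports(host: str, timeout: float = 3.0) -> dict:
    """Probe both default WinRM ports on the given host (hypothetical helper)."""
    return {
        scheme: port_is_open(host, port, timeout)
        for scheme, port in WINRM_PORTS.items()
    }
```

Run from the Build box, `check_winrm_ports("<name of your Test box>")` returns a dictionary telling you which listener answers. An open port only proves that something is listening on the Test box; authentication and TrustedHosts problems will still surface later.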

Also, if you really want to use HTTP and WinRM, remember that this is the trickiest combination – so think twice before going down that route!

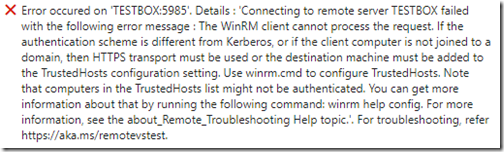

Then in terms of errors – you will likely face WinRM errors of all sorts. The most common is this:

If you are outside a domain then REMEMBER about Shadow Accounts (local accounts with the same user name and password on each machine): they are the only way to keep identity issues to a minimum. You’ll also need to set the TrustedHosts value to the machines pushing the agent.

Then this:

Remember that passwords need to match, and that mixing users at setup time isn’t really a good idea if you are going down the workgroup/non-trusted domain route.

Always triple-check passwords, and I recommend using the same account for both provisioning and execution, at least as a baseline. This gives you a safety net in case things don’t pan out as expected.

Finally, there is this error, which really puzzles me:

This is actually an aggregated exception:

Look at UAC and execution context for this: it always happens when something that is supposed to be elevated isn’t running as Administrator. It always drives me mad.
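A quick way to rule out the elevation problem is to check whether the current process is actually elevated before doing anything else. This is only a rough sketch under stated assumptions: `is_elevated` is a made-up helper name, the Windows branch relies on the `IsUserAnAdmin` function from `shell32`, and the non-Windows fallback is there purely so the snippet runs anywhere.

```python
import ctypes
import os
import sys


def is_elevated() -> bool:
    """Return True if the current process has administrative rights."""
    if sys.platform == "win32":
        # IsUserAnAdmin is exported by shell32; a nonzero result means elevated.
        return bool(ctypes.windll.shell32.IsUserAnAdmin())
    # Fallback for non-Windows systems, included only so the sketch is portable.
    return os.geteuid() == 0
```

If this returns `False` in the context that is supposed to be elevated, UAC is the first place to look.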