Among the many things I do, I manage a SonarQube instance. It is not a big deal, to be fair, but it is a valuable tool and it has its quirks, so you need to spend time on it.

So I thought about automating the upgrade process a little bit. It is a bit unusual, but it brings some value, so why not!

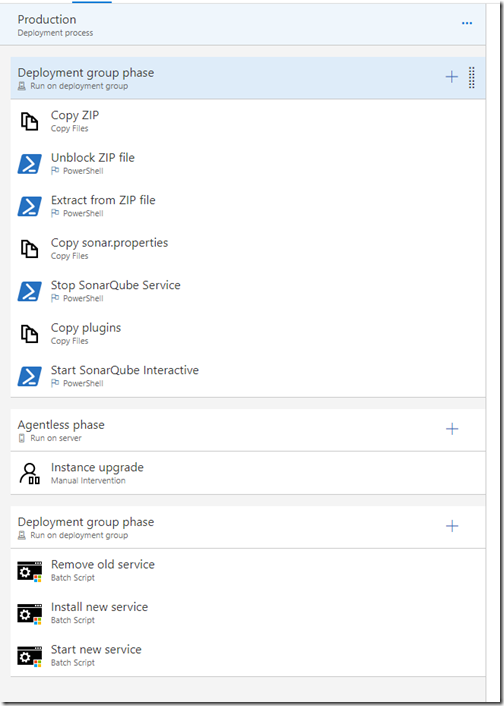

The result is a TFS or VSTS Team Project with a TFVC repository (TFVC is perfect for handling binary files!) and two Release Definitions, one for Test and one for Production.

There are two Definitions because, oddly enough, the Test one came after the Production one (which is easier, you’ll discover why later on). I might revamp the whole thing in the future to have sequential environments, but this is it as of now.

In the TFVC repository you are going to find folders for each SonarQube version I deployed on my server, together with the relevant sonar.properties file filled with the values you want, and a scripts folder with some utility scripts.

I am not automating the creation of the configuration file (via a find-and-replace operation, for example) because SonarSource explicitly tells you not to replace this file with the one from an existing version, but to start from scratch.

While testing your configuration you will need to work on it anyway, so it is a good idea to put it in a repository, and you will get versioning for free as well. Bonus.

Both my Release Definitions feature a Deployment Group: guess what, it contains my SonarQube server.

I also made use of Tags, in case I ever want completely separate environments: the Deployment Group phases are marked to run only on machines that carry the right tag for the Release Definition. That isn’t the case for now, though.

Now comes the fun part. Let’s start with the test upgrades. My process for testing a SonarQube upgrade is as follows:

- Restore a backup of the production database

- Get the new SonarQube version on the VM that hosts its services

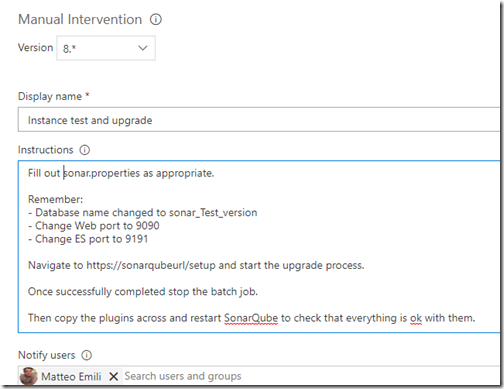

- Extract the new version and set the right values in the sonar.properties file (such as different ports and Java switches)

- Check that the upgrade runs successfully

- Verify all the involved plugins

Like I said, I am not going to automatically find and replace values in the sonar.properties file, and the later steps aren’t really worth scripting, but the first two steps can benefit from an automated process.

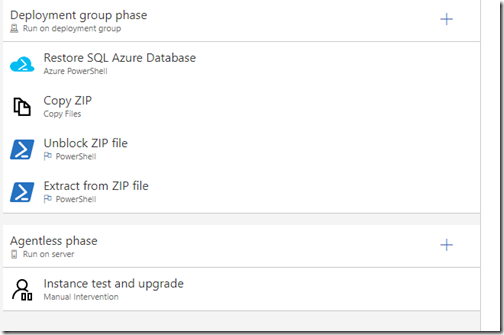

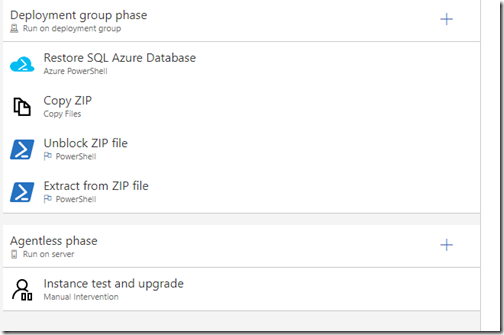

This is what my testing pipeline looks like:

Nothing too fancy, but it saves time.

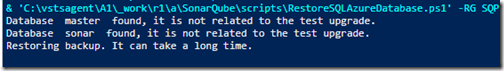

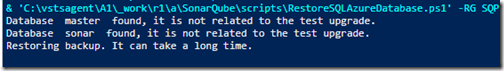

The cool bit here, IMHO, is the Azure PowerShell script I am running to restore the database. Given the Resource Group, Server, Database and SonarQube version (which is used to form the name), it checks whether I already have a testing database. If not, it starts restoring a backup copy from ten minutes earlier.
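To give an idea of the shape of such a script, here is a minimal sketch rather than my actual code. It assumes the current Az module (`Get-AzSqlDatabase` and `Restore-AzSqlDatabase`; the older AzureRM module has equivalents with the `AzureRm` prefix), a hypothetical `sonarqube-test-<version>` naming scheme, and that an existing testing database counts as a failed check. The function names are made up for illustration.

```powershell
# Hypothetical naming scheme: the version is simply appended to a fixed prefix.
function Get-TestDatabaseName {
    param([Parameter(Mandatory)][string]$Version)
    return "sonarqube-test-$Version"
}

# Sketch: restore the production database into a versioned test database.
function Restore-SonarQubeTestDatabase {
    param(
        [Parameter(Mandatory)][string]$ResourceGroup,
        [Parameter(Mandatory)][string]$Server,
        [Parameter(Mandatory)][string]$Database,   # production database to copy from
        [Parameter(Mandatory)][string]$Version     # SonarQube version under test
    )

    $testName = Get-TestDatabaseName -Version $Version

    # A missing test database is the expected case, so lookup errors are silenced.
    $existing = Get-AzSqlDatabase -ResourceGroupName $ResourceGroup -ServerName $Server `
        -DatabaseName $testName -ErrorAction SilentlyContinue
    if ($existing) {
        # Placeholder failure handling for this sketch.
        throw "Test database '$testName' already exists."
    }

    # The restore needs the resource ID of the source (production) database.
    $source = Get-AzSqlDatabase -ResourceGroupName $ResourceGroup -ServerName $Server `
        -DatabaseName $Database -ErrorAction Stop

    $restoreArgs = @{
        FromPointInTimeBackup = $true
        PointInTime           = (Get-Date).ToUniversalTime().AddMinutes(-10)  # ten minutes ago, UTC
        ResourceGroupName     = $ResourceGroup
        ServerName            = $Server
        TargetDatabaseName    = $testName
        ResourceId            = $source.ResourceId
    }
    Restore-AzSqlDatabase @restoreArgs
}

# Example call (all values are placeholders):
# Restore-SonarQubeTestDatabase -ResourceGroup 'my-rg' -Server 'my-sql' -Database 'sonarqube' -Version '7.1'
```

Nothing runs until the function is called, so it can be dot-sourced from the scripts folder and invoked by the Azure PowerShell task with the release variables as arguments.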

If this prerequisite check fails, the script's error handling stops the task immediately and marks the release as failed:

How? Like this:

The Write-Error statement stops the task execution and raises the error message, the Write-Host statement with the specific ##vso line marks the task result as failed, and the exit 1 line terminates the session so that whatever comes next (the database restore!) is not executed.
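As a rough illustration of those three statements together, here is a minimal sketch. The function names, the message text and the idea of passing in the result of the database lookup are my assumptions for the example. The `##vso[task.complete result=Failed;]` logging command is the part the build and release agent actually recognises.

```powershell
# Builds the agent logging command that marks the current task as failed.
function Format-TaskFailedCommand {
    param([Parameter(Mandatory)][string]$Message)
    return "##vso[task.complete result=Failed;]$Message"
}

# Hypothetical guard: $ExistingDatabase is whatever the test-database lookup returned
# ($null when there is no such database yet).
function Assert-NoTestDatabase {
    param(
        $ExistingDatabase,
        [Parameter(Mandatory)][string]$Name
    )

    if ($ExistingDatabase) {
        $message = "Test database '$Name' already exists, aborting before the restore."
        Write-Error $message                                    # surfaces the error in the task log
        Write-Host (Format-TaskFailedCommand -Message $message) # marks the task result as failed
        exit 1                                                  # nothing after this point runs
    }
}

# Example call (placeholder values): passes silently because nothing was found.
Assert-NoTestDatabase -ExistingDatabase $null -Name 'sonarqube-test-7.1'
```

Depending on the `$ErrorActionPreference` in effect, `Write-Error` may already terminate the script on its own; in that case the other two lines are just a safety net.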

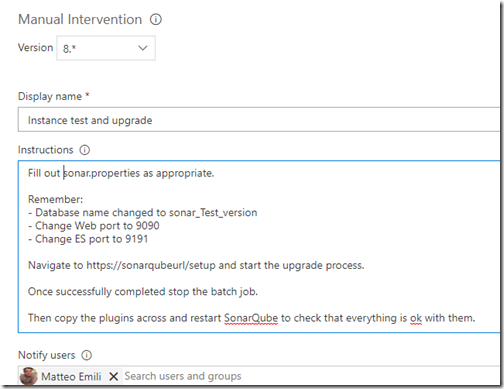

Finally, at the end there is an Agentless phase, which is just a manual intervention listing the required things to do:

I will go through the production pipeline in the next post, as it is different.